From the Spark 2.x release onwards , Structured Streaming API was included . Built on Spark SQL library, It makes easier to apply SQL query(Data frame API ) or scala operations(Dataset API) on streaming data.

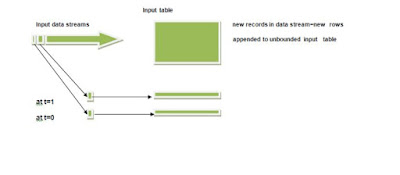

Architecture of Structured Streaming:

Structured Streaming works on the same platform like Spark Streaming of polling the data after some duration. It is based on the trigger interval, but some differences are there in structured streaming and makes it similar to real streaming. No batch concept is present in Structured Streaming. The received data in a trigger is appended to the continuously flowing data stream. Each row of the data stream is processed and the result is updated into the unbounded result table. The output result (updated, new result only, or all the results) depends on the output mode of the operations (Complete, Update, Append) we set.

i. Complete Mode

When updated result table will be written to the external sink, that mode is Complete Mode.

ii. Append Mode

In append mode only the new rows will be appended to the result table since the last trigger and we will write those rows to the external sink. This mode is applicable when existing rows in result table are not expected to change.

iii. Update Mode

In this mode, only the rows that were updated in the result table since the last trigger will be written to the external sink. Unlike complete mode, this only outputs the rows that have changed since the last trigger and it will be as same as Append mode when query doesn’t contain aggregations.

It uses checkpointing and also applies two conditions to recover from any error:

1. The source must be replayable.

2. The sinks must support idempotent operations to support reprocessing in case of failures.

With restricted sinks, Spark Structured Streaming always provides end-to-end, exactly once semantics.

1 Comments

Probably the best content,it is very much helpful ,really appreciable....

ReplyDeletePlease do not enter any spam link in the comment box