Is Apache Spark a database?

Apache Spark is not a database. It is an open-source, in-memory cluster computing framework for fast, large-scale data processing.

Spark is in high demand today because of its high performance across many domains and its large set of useful libraries.

Why is Apache Spark preferred?

1. Why is Apache Spark preferred over MapReduce? Spark can run some workloads up to 100 times faster than the Hadoop MapReduce processing framework. MapReduce reads from and writes to disk (HDFS) between processing steps, whereas Spark keeps intermediate data in memory.

2. Its built-in APIs are available in multiple languages, including Java, Scala, Python and R.

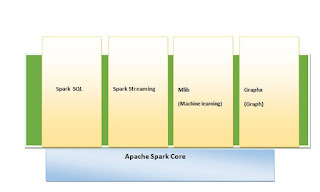

3. It includes a rich set of higher-level tools:

- Spark SQL: provides an API for processing structured data with SQL.

- MLlib: a machine learning library with algorithms and models for training and testing on data.

- Stream processing: Spark Streaming and Structured Streaming process streams of data in near-real-time applications.

- GraphX: used for graph processing and analytics.
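The speed argument in point 1 is easiest to see with an iterative job that passes over the same data several times. The sketch below is a toy model in Python, not Spark or Hadoop code: `SimulatedDisk`, `iterate_from_disk` and `iterate_in_memory` are hypothetical names, it simplifies MapReduce to re-reading its input on every pass, and it counts reads rather than measuring speed.

```python
# Toy model only (not Spark or Hadoop code): count how many times an
# iterative job reads its input from "disk" with and without keeping
# the data in memory between iterations.


class SimulatedDisk:
    """Hypothetical stand-in for HDFS that counts every full read."""

    def __init__(self, records):
        self._records = list(records)
        self.reads = 0

    def read(self):
        self.reads += 1
        return list(self._records)


def iterate_from_disk(disk, iterations):
    """MapReduce-style (simplified): each iteration goes back to disk for its input."""
    total = 0
    for _ in range(iterations):
        total += sum(disk.read())
    return total


def iterate_in_memory(disk, iterations):
    """Spark-style: read once, keep the records in memory, reuse them."""
    cached = disk.read()
    total = 0
    for _ in range(iterations):
        total += sum(cached)
    return total


disk_a = SimulatedDisk(range(1, 101))
disk_b = SimulatedDisk(range(1, 101))
print(iterate_from_disk(disk_a, 10), disk_a.reads)  # 50500 10
print(iterate_in_memory(disk_b, 10), disk_b.reads)  # 50500 1
```

In real Spark, keeping a dataset in memory for reuse across passes is requested with `cache()` or `persist()` on an RDD.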

Is Spark compatible with Hadoop?

Yes, Spark is compatible with Hadoop clusters. Spark also has its own cluster manager (Standalone mode), which lets Spark jobs run independently of Hadoop.

Spark can also run on a Hadoop 2 cluster through its YARN cluster manager, on Apache Mesos, and on Hadoop 1 inside the MapReduce framework. In this way Spark extends Hadoop while working with its existing components and tools, such as HDFS.
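In practice, the choice between these cluster managers comes down to the master URL passed to `spark-submit --master` (or set as the `spark.master` property). The function below is a hypothetical helper, not part of Spark: it only classifies the URL formats for the modes discussed here (plus local mode), and the host names are placeholders. Running inside MapReduce on Hadoop 1 is not covered.

```python
# Toy sketch: map a Spark master URL (the value given to
# `spark-submit --master` or the `spark.master` property) to the cluster
# manager it selects. Only a few common formats are handled.


def cluster_manager_for(master: str) -> str:
    if master == "local" or (master.startswith("local[") and master.endswith("]")):
        return "local"        # runs in a single process, no cluster manager
    if master.startswith("spark://"):
        return "standalone"   # Spark's own cluster manager, e.g. spark://host:7077
    if master == "yarn":
        return "yarn"         # Hadoop 2 YARN; the cluster location comes from the Hadoop config
    if master.startswith("mesos://"):
        return "mesos"        # Apache Mesos, e.g. mesos://host:5050
    raise ValueError(f"unrecognized master URL: {master!r}")


for url in ["local[*]", "spark://master-host:7077", "yarn", "mesos://mesos-host:5050"]:
    print(url, "->", cluster_manager_for(url))
```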

RDD (Resilient Distributed Dataset) in Spark

The basic building block of Spark is the RDD (Resilient Distributed Dataset). It is the core concept of the Spark framework.

An RDD can hold any type of data, and it is split into partitions that are distributed across the nodes of the cluster.
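To picture what "distributed across the cluster" means, here is a toy Python model, not Spark code: `split_into_partitions` and `place_on_nodes` are hypothetical helpers, and the round-robin placement is a simplifying assumption. In PySpark the real starting point would be `sc.parallelize(data, numSlices)`, which builds an RDD with the requested number of partitions.

```python
# Toy model (not Spark code): split a dataset into partitions and place
# them on hypothetical worker nodes, the way an RDD is spread across a cluster.


def split_into_partitions(data, num_partitions):
    """Split data into num_partitions contiguous, nearly equal slices."""
    items = list(data)
    n = len(items)
    return [items[i * n // num_partitions:(i + 1) * n // num_partitions]
            for i in range(num_partitions)]


def place_on_nodes(partitions, nodes):
    """Round-robin placement (assumption); real Spark schedules each partition's
    task itself, taking data locality into account."""
    placement = {node: [] for node in nodes}
    for index, part in enumerate(partitions):
        placement[nodes[index % len(nodes)]].append((index, part))
    return placement


# Any Python objects can be stored, just as an RDD can hold any type of data.
records = [1, "two", 3.0, (4, "four"), {"five": 5}, None, 7]
parts = split_into_partitions(records, 3)
print(parts)
# [[1, 'two'], [3.0, (4, 'four')], [{'five': 5}, None, 7]]
print(place_on_nodes(parts, ["node-1", "node-2"]))
```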

How is an RDD resilient? It is resilient because it is immutable and highly fault tolerant.

Why is an RDD immutable in Spark? Immutable means that once an RDD is created, it cannot be modified. A transformation does not change the original RDD; instead, it creates a new RDD and leaves the original unchanged.

In a cluster, data can be lost when a node fails, but RDDs are fault tolerant because each RDD knows how to recreate and recompute its data from the steps that produced it (its lineage).
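Immutability and lineage-based recovery can be modelled together. The class below is a hypothetical `ToyRDD`, not Spark's RDD implementation: it keeps its source partitions and the list of transformations applied to them (its lineage), returns a new object from every transformation, and rebuilds a partition from the lineage after that partition is dropped to simulate a failed node.

```python
# Toy model (not Spark's RDD class): a dataset defined by fixed source
# partitions plus a lineage of transformations, so a lost in-memory
# partition can be recomputed instead of being gone for good.


class ToyRDD:
    """Hypothetical RDD-like class for illustration only."""

    def __init__(self, source_partitions, lineage=()):
        self._source = tuple(tuple(p) for p in source_partitions)
        self.lineage = tuple(lineage)   # recipe for rebuilding any partition
        self._memory = {}               # partition index -> computed data

    def map(self, fn):
        # Immutability: return a NEW ToyRDD with one more lineage step;
        # this object and its data are left unchanged.
        def step(part):
            return [fn(x) for x in part]
        return ToyRDD(self._source, self.lineage + (step,))

    def filter(self, pred):
        def step(part):
            return [x for x in part if pred(x)]
        return ToyRDD(self._source, self.lineage + (step,))

    def _compute(self, index):
        # Replay every lineage step on one source partition.
        data = list(self._source[index])
        for step in self.lineage:
            data = step(data)
        return data

    def partition(self, index):
        # Compute on demand; a lost partition is rebuilt from the lineage.
        if index not in self._memory:
            self._memory[index] = self._compute(index)
        return self._memory[index]

    def collect(self):
        return [x for i in range(len(self._source)) for x in self.partition(i)]

    def lose_partition(self, index):
        # Simulate a node failure wiping one partition from memory.
        self._memory.pop(index, None)


numbers = ToyRDD([[1, 2], [3, 4], [5, 6]])
squares = numbers.map(lambda x: x * x)
print(squares.collect())   # [1, 4, 9, 16, 25, 36]
squares.lose_partition(1)  # the "node" holding partition 1 fails
print(squares.collect())   # [1, 4, 9, 16, 25, 36], rebuilt from lineage
print(numbers.collect())   # [1, 2, 3, 4, 5, 6], the original is unchanged
```

Real Spark recovers a lost partition from the lineage of its parent RDDs in a similar spirit; for an actual RDD, `rdd.toDebugString()` returns a description of that lineage.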

RDDs support two types of operations:

1. Transformations:

When a transformation function such as map or filter is called on an RDD, it creates a new RDD. Nothing is evaluated at that point: transformations are lazy.

2. Actions:

When an action function is called on an RDD, all the pending transformations are computed at that time and the result value is returned.

Examples of actions: reduce, collect, count, first, take, countByKey and foreach.
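The difference between lazy transformations and eager actions can be shown with a small Python model. `LazyDataset` is a hypothetical class, not PySpark: its `map` and `filter` only record steps, while `collect`, `count`, `first`, `take` and `reduce` run every recorded step. Unlike Spark, `first` and `take` here compute the whole dataset for simplicity.

```python
# Toy model (not PySpark): transformations only record a step and return a
# new dataset; actions run all recorded steps and return a result.
from functools import reduce as fold


class LazyDataset:
    def __init__(self, data, steps=()):
        self._data = list(data)
        self._steps = tuple(steps)   # recorded transformations, not yet run

    # Transformations: return a new LazyDataset, compute nothing.
    def map(self, fn):
        return LazyDataset(self._data, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._data, self._steps + (("filter", pred),))

    def _run(self):
        out = list(self._data)
        for kind, fn in self._steps:
            if kind == "map":
                out = [fn(x) for x in out]
            else:  # "filter"
                out = [x for x in out if fn(x)]
        return out

    # Actions: trigger the computation and return a value.
    def collect(self):
        return self._run()

    def count(self):
        return len(self._run())

    def first(self):
        return self._run()[0]

    def take(self, n):
        return self._run()[:n]

    def reduce(self, fn):
        return fold(fn, self._run())


calls = []


def double(x):
    calls.append(x)   # records every time the function really runs
    return 2 * x


ds = LazyDataset(range(1, 6)).map(double).filter(lambda x: x > 4)
print(len(calls))                       # 0: only transformations so far
print(ds.collect())                     # [6, 8, 10]
print(len(calls))                       # 5: the action ran the pipeline
print(ds.reduce(lambda a, b: a + b))    # 24
```

Calling a second action recomputes the whole pipeline (here `calls` grows from 5 to 10 after `reduce`), which is why Spark lets you `cache()` an RDD that several actions reuse.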

How Spark processes data

When a Spark job is submitted (for example from the Spark shell), Spark converts the code containing transformations and actions into a logical DAG (Directed Acyclic Graph). When an action is called, the DAG is submitted to the DAG scheduler, which splits it into stages of tasks and runs those tasks on the cluster.
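The stage-splitting step can be sketched for the simple case of a linear chain of operations. This toy planner is not Spark's DAG scheduler: `Op`, `plan_stages` and the word-count chain are hypothetical, and real DAGs can branch (for example at joins). It relies on one real rule: narrow transformations such as map and filter can run together in one stage, while a wide transformation such as reduceByKey needs a shuffle, so a new stage begins there.

```python
# Toy stage planner for a linear chain of operations (not Spark's DAG scheduler).
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Op:
    name: str
    wide: bool  # True when the op needs a shuffle, e.g. reduceByKey or groupByKey


def plan_stages(lineage: List[Op]) -> List[List[str]]:
    """Group consecutive narrow ops into one stage; start a new stage at each shuffle."""
    stages: List[List[str]] = [[]]
    for op in lineage:
        if op.wide and stages[-1]:
            stages.append([])   # shuffle boundary: the next stage begins here
        stages[-1].append(op.name)
    return stages


word_count = [
    Op("textFile", wide=False),
    Op("flatMap", wide=False),
    Op("map", wide=False),
    Op("reduceByKey", wide=True),
    Op("filter", wide=False),
]

# In Spark, this planning happens only when an action such as collect() is called.
for number, stage in enumerate(plan_stages(word_count)):
    print(f"Stage {number}: {' -> '.join(stage)}")
# Stage 0: textFile -> flatMap -> map
# Stage 1: reduceByKey -> filter
```

Each stage then runs as one task per partition, and a stage after a shuffle starts only once the stage before it has produced its shuffle output.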

I hope you now understand how Spark gained popularity over Hadoop MapReduce and how it makes big data applications faster.

Happy Learning!

Debashree
