You already know that Apache Sqoop is an ingestion tool designed to transfer data between an RDBMS and Hadoop. Now let me discuss Apache Flume.

Apache Flume is a data ingestion tool in the Hadoop ecosystem. Originally developed by Cloudera, it collects, processes and aggregates streaming data from various servers and delivers it into HDFS (Hadoop Distributed File System) in an efficient and reliable manner.

Initially, Apache Flume was built to handle only server log data. Later, it was extended to handle event data as well.

It is designed for high-volume ingestion of event-based data into Hadoop.

For now, we can think of one event as one message that is going to be ingested into HDFS. Once the data lands in HDFS, we can perform our own analysis on top of it.

Flume integrates easily with other Hadoop ecosystem components, including HBase and Solr.

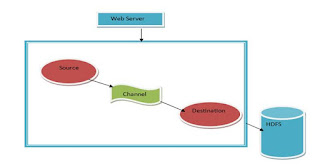

Flume Architecture:

Let me discuss how Flume works.

· Event: A single message transported by Flume is known as an event.

· Client: It generates events and sends them to one or more agents.

· Agent: An agent receives events from clients or from other agents and forwards them to its sink, which may pass them on to another agent. An agent is a Java virtual machine (JVM) process in which Flume runs; it hosts the configured source, channel and sink.

· Source: A source receives data from data generators as events and writes them to one or more channels. Several source types are available, such as exec, Avro, JMS, spooling directory, etc.

· Sink: A sink consumes events from a channel and delivers them to the destination or the next hop. The sink's destination might be another agent or a central store. Multiple sink types are available to deliver data to a wide range of destinations, for example HDFS, HBase, logger, etc.

· Channel: A channel acts as a bridge between sources and sinks and is responsible for buffering the data. Sources put events into the channel, and sinks drain them from it. Multiple channel types are available, such as memory, JDBC, etc.
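The way these components hand events to each other can be sketched with a toy Python model. This is not Flume's API: `Event`, `MemoryChannel`, `ListSink` and `source_ingest` are hypothetical names, and the sketch assumes one source, one memory channel and one sink. It only mirrors the idea that a source writes events into a bounded buffer and a sink drains them; real Flume channels wrap puts and takes in transactions, which are left out here.

```python
# Toy model of Flume's source -> channel -> sink flow; not Flume's real API.
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    """A Flume-style event: a byte-array body plus optional string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Bounded in-memory buffer between a source and a sink."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._queue = deque()

    def put(self, event):
        # Refuse the event when the buffer is full (real Flume uses transactions).
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(event)
        return True

    def take(self):
        # Return the oldest buffered event, or None when the channel is empty.
        return self._queue.popleft() if self._queue else None


class ListSink:
    """Drains a channel into a Python list that stands in for HDFS."""

    def __init__(self, channel):
        self.channel = channel
        self.delivered = []

    def process(self):
        # Move every buffered event to the destination; return how many moved.
        moved = 0
        while (event := self.channel.take()) is not None:
            self.delivered.append(event)
            moved += 1
        return moved


def source_ingest(lines, channel):
    """Wrap each text line in an event and write it to the channel."""
    accepted = 0
    for line in lines:
        if channel.put(Event(body=line.encode("utf-8"))):
            accepted += 1
    return accepted


channel = MemoryChannel(capacity=2)
accepted = source_ingest(["log line 1", "log line 2", "log line 3"], channel)
sink = ListSink(channel)
drained = sink.process()
print(accepted, drained, [e.body for e in sink.delivered])
# 2 2 [b'log line 1', b'log line 2']
```

Because the channel sits between the two sides, the source and sink can work at different speeds. In this sketch the third line is simply refused once the two-slot buffer is full; a real agent would have to retry or fail that delivery.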

Now we will see how to configure a Flume agent.

Each Flume source, channel and sink type has its own properties, which must be declared in the configuration file.

The command to run an agent from a configuration file (.conf file) is:

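```
flume-ng agent \
  -n <flume agent name declared in .conf file> \
  -c conf \
  -f <path of the .conf file on the machine running the agent>
```

Here `-n` must match the agent name used as the prefix of every property in the .conf file, `-c` points to Flume's configuration directory, and `-f` gives the path of the agent's configuration file.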

Now we will configure a Flume agent whose source is Avro, channel is memory and sink is HDFS.

```properties
# Declaring the source, sink and channel of the Flume agent
Agent1.sources = avrosource
Agent1.sinks = hdfssink
Agent1.channels = Mchannel

# Defining the source
Agent1.sources.avrosource.type = avro
Agent1.sources.avrosource.bind = <IP address of avro source>
Agent1.sources.avrosource.port = <port number of avro source>

# Defining the sink
Agent1.sinks.hdfssink.type = hdfs
Agent1.sinks.hdfssink.hdfs.path = <path name in HDFS to store files>

# Defining the channel
Agent1.channels.Mchannel.type = memory
Agent1.channels.Mchannel.capacity = 10000

# Binding the channel to the source and the sink
Agent1.sources.avrosource.channels = Mchannel
Agent1.sinks.hdfssink.channel = Mchannel
```
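The wiring rules in this configuration (every property starts with the agent name, a source lists its channels under `channels`, and a sink names exactly one channel under `channel`) can be made visible with a small Python check. `parse_flume_properties` and `check_wiring` are hypothetical helpers written only for illustration; Flume does its own checking when it loads the file, and this parser assumes plain `key = value` lines rather than the full Java properties syntax.

```python
# Hypothetical helpers, not part of Flume: they only illustrate how the
# component names in an agent's .conf file must line up.


def parse_flume_properties(text):
    """Parse simple 'key = value' lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_wiring(props, agent):
    """Return a list of problems in how the agent's sources and sinks use channels."""
    problems = []
    declared = set(props.get(f"{agent}.channels", "").split())
    # A source may write to several channels (space-separated list).
    for source in props.get(f"{agent}.sources", "").split():
        bound = props.get(f"{agent}.sources.{source}.channels", "").split()
        if not bound:
            problems.append(f"source {source} is not bound to any channel")
        for channel in bound:
            if channel not in declared:
                problems.append(f"source {source} uses undeclared channel {channel!r}")
    # A sink reads from exactly one channel.
    for sink in props.get(f"{agent}.sinks", "").split():
        channel = props.get(f"{agent}.sinks.{sink}.channel", "")
        if channel not in declared:
            problems.append(f"sink {sink} uses undeclared or missing channel {channel!r}")
    return problems


conf = """
Agent1.sources = avrosource
Agent1.sinks = hdfssink
Agent1.channels = Mchannel
Agent1.sources.avrosource.channels = Mchannel
Agent1.sinks.hdfssink.channel = Mchannel
"""
print(check_wiring(parse_flume_properties(conf), "Agent1"))  # []

# A lowercase typo in the sink binding is reported.
typo = conf.replace("hdfssink.channel = Mchannel", "hdfssink.channel = mchannel")
print(check_wiring(parse_flume_properties(typo), "Agent1"))
# ["sink hdfssink uses undeclared or missing channel 'mchannel'"]
```

The check compares names exactly, which is why `Mchannel` must be spelled the same way in the declaration and in both bindings.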

That covers the basics of Flume, and you should now have an idea of how to configure and run a Flume agent.

Please follow the ItechShree blog for more updates. Next time, I will give you an example of how to configure Flume with a spooling directory source.

See you in my next blog!
