Compression is one of the optimization techniques available in Apache Hive. It is most useful for data-intensive workloads, where network transfer and disk I/O take up much of the run time.

In this post I will demonstrate how to apply compression in Hive with an example. I hope it helps you apply it in your own applications.

Different compression codecs are available depending on the Hadoop version installed on your machine. You can use Hive's set command to display the value of a hiveconf or Hadoop configuration property. The configured codecs are displayed as a comma-separated list.

Here are some of them:

org.apache.hadoop.io.compress.GzipCodec

org.apache.hadoop.io.compress.DefaultCodec

org.apache.hadoop.io.compress.BZip2Codec

org.apache.hadoop.io.compress.SnappyCodec
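As a quick check, the codec list can be printed from the Hive prompt. This is a minimal sketch, assuming the standard Hadoop property io.compression.codecs is set explicitly in your cluster configuration. On some installations it is left empty and the codecs are discovered automatically, in which case Hive reports the property as undefined.

```sql
-- Print the codecs registered in the Hadoop configuration
-- (assumes io.compression.codecs is set, e.g. in core-site.xml).
set io.compression.codecs;
```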

Let's look at how to enable a compression codec in Hive.

There are two places where we can enable a codec in Hive. One is compression of the intermediate data passed between jobs; the other is compression of the final output that a Hive query writes to HDFS.

Compression on intermediate process:

When we run a HiveQL query, the Hive engine converts it into a series of MapReduce jobs. Intermediate data refers to the output of one job that is fed as input to the next job.
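To see how a query breaks down into jobs, you can prefix it with EXPLAIN. The sketch below assumes the Deb_emp table created later in this post and the MapReduce execution engine; the query itself is only an illustration. An aggregation followed by a sort typically needs more than one job, and the output of the first job is the intermediate data discussed here.

```sql
-- Show the plan (stages and their dependencies) without running the query.
-- Deb_emp is the example table created later in this post.
EXPLAIN
SELECT dept, count(*) AS cnt
FROM Deb_emp
GROUP BY dept
ORDER BY cnt DESC;
```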

We can enable compression of intermediate data either with the set command in a Hive session or by setting properties in hive-site.xml, which makes the setting permanent.

hive> set hive.exec.compress.intermediate=true;

hive> set hive.intermediate.compression.codec=org.apache.hadoop.io.compress.BZip2Codec;

hive> set hive.intermediate.compression.type=BLOCK;

With the set command, you can apply a compression codec to individual queries in the current session as needed. To make the setting permanent, add the following properties to hive-site.xml:

<property>
  <name>hive.exec.compress.intermediate</name>
  <value>true</value>
</property>
<property>
  <name>hive.intermediate.compression.codec</name>
  <value>org.apache.hadoop.io.compress.BZip2Codec</value>
  <description/>
</property>
<property>
  <name>hive.intermediate.compression.type</name>
  <value>BLOCK</value>
  <description/>
</property>
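Whichever way the properties are set, you can confirm what the current session actually uses by calling set with just the property name, which prints the value without changing it. A minimal sketch:

```sql
-- Print the current value of each property; with no "=value" part,
-- set only displays the setting and does not modify it.
set hive.exec.compress.intermediate;
set hive.intermediate.compression.codec;
set hive.intermediate.compression.type;
```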

Compression while writing final output to HDFS location using Hive query:

We can enable this with the set command as well, or by setting properties in the hive-site.xml and mapred-site.xml files.

hive> set hive.exec.compress.output=true;

hive> set mapreduce.output.fileoutputformat.compress=true;

hive> set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.SnappyCodec;

hive> set mapreduce.output.fileoutputformat.compress.type=BLOCK;

or

mapred-site.xml

<property>
  <name>mapreduce.output.fileoutputformat.compress</name>
  <value>true</value>
</property>
<property>
  <name>mapreduce.output.fileoutputformat.compress.codec</name>
  <value>org.apache.hadoop.io.compress.SnappyCodec</value>
</property>
<property>
  <name>mapreduce.output.fileoutputformat.compress.type</name>
  <value>BLOCK</value>
</property>

hive-site.xml

<property>
  <name>hive.exec.compress.output</name>
  <value>true</value>
</property>
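One point worth noting is that the compress.type property (NONE, RECORD or BLOCK) applies to SequenceFile output; plain text files are simply compressed as a whole. As a sketch, assuming the Deb_emp table from the example further down and a cluster where the Snappy native libraries are installed, a block-compressed SequenceFile table could be created like this (emp_seq is a hypothetical table name):

```sql
-- Enable compressed output with Snappy and block-level compression.
set hive.exec.compress.output=true;
set mapreduce.output.fileoutputformat.compress=true;
set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.SnappyCodec;
set mapreduce.output.fileoutputformat.compress.type=BLOCK;

-- emp_seq is a hypothetical name; Deb_emp is the example table from this post.
CREATE TABLE emp_seq
STORED AS SEQUENCEFILE
AS SELECT * FROM Deb_emp;
```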

Let's look at an example where I have used a compression codec in Hive. Here I have used the gzip codec.

Create a table using the following commands and load data into it.

create table Deb_emp(empid int,ename String,dept String ) row format delimited fields terminated by '\t' lines terminated by '\n';

load data local inpath '/home/hodoop/employee.txt' into table Deb_emp;

Verify the data

select * from Deb_emp;

Set the compression properties in Hive:

set hive.exec.compress.output=true;

set mapreduce.output.fileoutputformat.compress=true;

set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.GzipCodec;

set hive.exec.compress.intermediate=true;

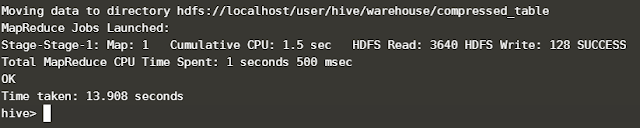

Compressed table creation

CREATE TABLE compressed_emp ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
AS SELECT * from Deb_emp;

This generates the output files in gzip format, and we can display their contents with the dfs -text command.
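As a sketch of that last step, the commands below list the table's files and print one of them in decompressed form. The warehouse path and the file name are assumptions (the default warehouse directory and a typical output file name), so check the output of dfs -ls for the actual names on your cluster.

```sql
-- List the files written for the compressed table. The path is an assumption:
-- the default warehouse directory is /user/hive/warehouse.
dfs -ls /user/hive/warehouse/compressed_emp;

-- Print a gzipped file as plain text. Replace the file name with one shown
-- by the listing above (000000_0.gz is only a typical example).
dfs -text /user/hive/warehouse/compressed_emp/000000_0.gz;
```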

If you found this post helpful, please share it with others.
