A Spark Dataset is a distributed collection of typed objects. It was introduced in Spark 1.6.

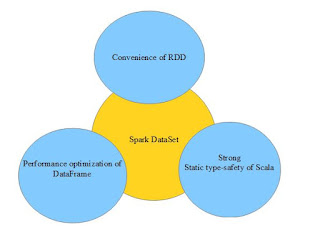

It combines features of both RDD and DataFrame, offering fast execution and memory-optimized data processing. A Dataset relies on Encoders, which speed up execution and the transfer of data over the network compared to approaches that depend on Java or Kryo serialization.
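To make the Encoder idea more concrete, here is a minimal sketch. It assumes a local SparkSession and a `Shop` case class like the one used later in this post; the object name `EncoderSketch` and the app name are only illustrative. It derives an Encoder for the case class, prints the schema Spark infers from it, and uses that Encoder explicitly to build a Dataset.

```scala
import org.apache.spark.sql.{Encoder, Encoders, SparkSession}

// Record type used for illustration (same shape as the Shop class later in this post)
case class Shop(fruitname: String, quantity: Long)

object EncoderSketch {
  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for this demo
    val spark = SparkSession.builder()
      .appName("encoder-sketch")
      .master("local[*]")
      .getOrCreate()

    // Derive an Encoder for the case class; it maps Shop objects
    // to and from Spark's internal row format
    val shopEncoder: Encoder[Shop] = Encoders.product[Shop]

    // The Encoder carries the schema derived from the case class fields
    println(shopEncoder.schema.treeString)

    // Pass the Encoder explicitly instead of relying on spark.implicits._
    val ds = spark.createDataset(Seq(Shop("Apple", 180L), Shop("Banana", 40L)))(shopEncoder)
    ds.show()

    spark.stop()
  }
}
```

In everyday code the Encoder is usually picked up implicitly through `import spark.implicits._`; passing it by hand here just makes its role visible.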

The Dataset concept was introduced with the following advantages.

Spark Dataset Features

Thus, developers get more functional benefits when working with the Dataset API.

The Dataset API is available only for Java and Scala, as a single unified interface, which reduces the burden of working with multiple libraries.

As of Spark 2.0.0, DataFrame is a mere type alias for Dataset[Row] in Scala. In the Java API, users use Dataset<Row> to represent a DataFrame.
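The alias can be checked with a small sketch like the one below. It assumes a local SparkSession; `AliasSketch` and `countRows` are hypothetical names introduced only for this example. Because the two types are the same, a DataFrame can be assigned to a `Dataset[Row]` variable or passed to a function expecting `Dataset[Row]` without any conversion.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

object AliasSketch {
  // Hypothetical helper: declared for Dataset[Row], but accepts any DataFrame as-is
  def countRows(ds: Dataset[Row]): Long = ds.count()

  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for this demo
    val spark = SparkSession.builder()
      .appName("alias-sketch")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    val df: DataFrame = Seq(("Apple", 180), ("Banana", 40)).toDF("fruitname", "quantity")

    // No cast or conversion: DataFrame and Dataset[Row] are the same type
    val sameThing: Dataset[Row] = df

    println(countRows(df)) // prints 2
    sameThing.show()

    spark.stop()
  }
}
```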

Creating a Dataset in Scala:

Creating a Dataset from an RDD

import spark.implicits._ // needed for toDS(); spark-shell imports this automatically

val rdd = sc.parallelize(Seq(("Apple", 180), ("Banana", 40))) // create an RDD by calling parallelize() on a collection

val dataset = rdd.toDS() // convert the RDD to a Dataset[(String, Int)] by calling toDS()

dataset.show() // view the content of the Dataset

Creating a Dataset from a DataFrame

case class Shop(fruitname: String, quantity: Long) // case class named Shop with fields fruitname and quantity

val data = Seq(("Apple", 180), ("Banana", 40)) // define a collection

val df = sc.parallelize(data).toDF("fruitname", "quantity") // create an RDD and convert it to a DataFrame; the column names must match the case class fields

val dataset = df.as[Shop] // create a Dataset[Shop]; columns are matched to the case class fields by name

dataset.show() // view the content of the Dataset
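Once the rows are typed as `Shop`, the fields can be used directly inside ordinary Scala lambdas. The sketch below is only an illustration under a few assumptions: it runs as a standalone program with a local SparkSession, and `TypedOpsSketch`, `wellStocked` and the threshold value are hypothetical names chosen for this example.

```scala
import org.apache.spark.sql.{Dataset, SparkSession}

case class Shop(fruitname: String, quantity: Long)

object TypedOpsSketch {
  // Hypothetical helper: keeps shops with quantity above the threshold
  // and returns only their fruit names
  def wellStocked(ds: Dataset[Shop], threshold: Long): Dataset[String] = {
    import ds.sparkSession.implicits._ // provides the Encoder[String] that map() needs
    ds.filter(_.quantity > threshold).map(_.fruitname)
  }

  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for this demo
    val spark = SparkSession.builder()
      .appName("typed-ops-sketch")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    val dataset = Seq(Shop("Apple", 180L), Shop("Banana", 40L)).toDS()

    // Field access is checked at compile time: a typo such as _.quantty would not compile
    wellStocked(dataset, 100L).show()

    spark.stop()
  }
}
```

With a plain DataFrame the same filter would refer to the column by a string name, so a misspelled column would only fail at runtime.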

Learning Coding!!

See you in the next blog!
