In this post, you will learn:

- What Sqoop is and what it does

- Why it is needed and what its features are

- How it works

When Big Data came into the picture, there was a need to move huge volumes of data from traditional relational systems into Hadoop. However:

- Shell scripts were very slow at transferring large amounts of data.

- Writing MapReduce code to load data into Hadoop was very tedious.

Sqoop was created to fill this gap by providing a solid interface between relational database servers and Hadoop's HDFS.

Why is Sqoop needed?

1. With Sqoop, developers found it much easier to import and export data between an RDBMS and Hadoop: they write a Sqoop command, and Sqoop internally converts it into MapReduce tasks that run on the Hadoop cluster and read from or write to HDFS.

2. Sqoop provides fault tolerance because it uses the MapReduce framework to import and export data.

What is Sqoop?

Sqoop is a tool designed to transfer data between relational databases (such as MySQL and Oracle) and Hadoop (HDFS, Hive, HBase, etc.).

It was a top-level project of the Apache Software Foundation (it has since been retired to the Apache Attic) and works with relational databases such as Teradata, Netezza, Oracle, MySQL, and PostgreSQL.

It provides a command-line interface for importing and exporting data, so database developers only need to supply the database credentials, the source, the destination, and similar details; the Sqoop tool does the rest of the work itself.

Features of Sqoop

· Full load: Apache Sqoop can transfer a whole table from a relational database to Hadoop with a single command.

· Incremental load: It can also transfer only the data that has been added or updated since the previous import.

· Parallel import/export: Sqoop runs imports and exports as MapReduce jobs on the YARN framework, so data is transferred in parallel, with fault tolerance on top of that parallelism.

· Compression: Data can be compressed during transfer using a compression codec.

· Loading data directly into Hive: Sqoop can create a Hive table matching the RDBMS table and load the data into it in one shot.

· Connectors for all major RDBMS: Sqoop provides connectors for popular relational databases such as MySQL, PostgreSQL, Oracle, SQL Server, and DB2, which can give better performance than generic database access.
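The incremental load feature is easiest to picture in Sqoop's append mode, which tracks a check column and the last value imported on the previous run (the `--check-column` and `--last-value` options). The selection logic can be sketched in Python over an in-memory list of rows. This is a minimal illustration, not Sqoop's code: the real tool builds an equivalent condition into the SQL query it sends to the database, and the function and table names below are hypothetical.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def incremental_append(rows: List[Row], check_column: str, last_value: Any) -> Tuple[List[Row], Any]:
    """Select rows added since the previous run, plus the value to remember for the next run."""
    # Only rows whose check column is strictly greater than the last imported value are new.
    new_rows = [row for row in rows if row[check_column] > last_value]
    # The largest check-column value in this batch becomes the starting point next time;
    # if nothing is new, the old value is kept.
    new_last = max((row[check_column] for row in new_rows), default=last_value)
    return new_rows, new_last


# Hypothetical source table: the row with id 1 was imported on an earlier run.
table = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
batch, last = incremental_append(table, "id", 1)
print([row["id"] for row in batch], last)  # [2, 3] 3
```

Storing the returned value between runs is what lets each import pick up only the newly added rows instead of reloading the whole table.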

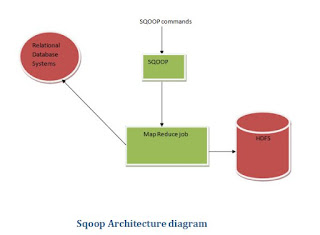

Sqoop Architecture:

Sqoop has connectors to work with a lot of popular relational databases, including MySQL, PostgreSQL, Oracle, SQL Server, and DB2.

When a Sqoop command or job is submitted, Sqoop first converts it into map tasks, and each map task brings a slice of the incoming data into HDFS. For an export job, the map tasks read the data from HDFS and write it back into the RDBMS table, which must already exist with a schema matching the exported data.

A reduce phase is only needed for aggregation, and Sqoop simply imports and exports data without aggregating it, so its jobs are map-only. The job launches as many mappers as the user specifies (four by default). Sqoop assigns each mapper a part of the data, by default by splitting the range of the table's primary key into roughly equal slices, so the mappers can work in parallel. Each mapper then opens its own JDBC connection to the database, fetches its assigned part of the data, and writes it to HDFS, Hive, or HBase depending on the arguments given on the command line.
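The way Sqoop divides the data among mappers can be sketched as follows. Sqoop queries the minimum and maximum of the split column (the primary key by default, or the column given with `--split-by`) and cuts that range into one slice per mapper (set with `-m`/`--num-mappers`). This Python sketch assumes an integer key; the function names, the `orders` table, and the `id` column are hypothetical, and Sqoop's own splitter differs in details. Note that equal key ranges only mean equal row counts when the key values are evenly distributed.

```python
from typing import List, Tuple


def integer_splits(min_val: int, max_val: int, num_mappers: int) -> List[Tuple[int, int]]:
    """Cut the inclusive key range [min_val, max_val] into at most num_mappers contiguous slices."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    if max_val < min_val:
        return []  # empty table: nothing to split
    span = max_val - min_val + 1  # number of distinct integer keys in the range
    count = min(num_mappers, span)  # never hand a mapper an empty key range
    base, extra = divmod(span, count)
    splits = []
    lo = min_val
    for i in range(count):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first slices
        hi = lo + size - 1
        splits.append((lo, hi))
        lo = hi + 1
    return splits


def split_queries(table: str, column: str, splits: List[Tuple[int, int]]) -> List[str]:
    """Build one bounded SELECT per slice; each mapper would run one of these over JDBC."""
    return [f"SELECT * FROM {table} WHERE {column} >= {lo} AND {column} <= {hi}" for lo, hi in splits]


# Pretend SELECT MIN(id), MAX(id) FROM orders returned 1 and 10, and 4 mappers were requested.
for query in split_queries("orders", "id", integer_splits(1, 10, 4)):
    print(query)
```

Because the slices are contiguous and non-overlapping, every row is fetched by exactly one mapper, which is what allows the mappers to run independently and in parallel.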

This is how Sqoop import and export work: Sqoop gathers the table metadata, submits a map-only job (no reduce phase ever runs), and the mappers store the data in HDFS. In an export, the data flows in the reverse direction, back into the RDBMS.

The Sqoop import tool imports a table from the RDBMS into Hadoop. Each row of the table becomes a record in HDFS, and the records are stored either as text data in text files or as binary data in SequenceFiles. Conversely, the Sqoop export tool exports HDFS files back into an RDBMS table: the files are read and parsed into a set of records using the user-specified delimiter, and each record becomes a row of the table.
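The row-to-record mapping can be illustrated with a small round trip. In text mode, Sqoop by default writes comma-separated fields and newline-terminated records (the `--fields-terminated-by` and `--lines-terminated-by` options change this), and export parses such lines back into typed column values. This Python sketch assumes that no value contains the delimiter (real Sqoop offers escaping and enclosing options for that case) and that the column types are known; the function names and the sample table are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def rows_to_text(rows: Sequence[Sequence[object]], delimiter: str = ",") -> str:
    """Import direction: each table row becomes one newline-terminated, delimited line."""
    return "".join(delimiter.join(str(value) for value in row) + "\n" for row in rows)


def text_to_rows(
    text: str, converters: Sequence[Callable[[str], object]], delimiter: str = ","
) -> List[Tuple[object, ...]]:
    """Export direction: split each line on the delimiter and convert fields to column types."""
    rows = []
    for line in text.splitlines():
        if not line:
            continue  # skip blank lines
        fields = line.split(delimiter)
        if len(fields) != len(converters):
            raise ValueError(f"expected {len(converters)} fields, got {len(fields)}: {line!r}")
        rows.append(tuple(convert(field) for convert, field in zip(converters, fields)))
    return rows


# Hypothetical table with (id INTEGER, name VARCHAR, score DOUBLE) columns.
table = [(1, "alice", 30.5), (2, "bob", 41.0)]
text = rows_to_text(table)
print(text, end="")  # 1,alice,30.5 and 2,bob,41.0 on separate lines
back = text_to_rows(text, [int, str, float])
print(back == table)  # True
```

The export side needs the column types because text files carry only strings; this is why the target table's schema has to match the exported data.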

That's all for this overview of Sqoop, covering its architecture and the flow of the import and export processes.

In the next ITechShree blog post, I will explain Sqoop commands.
